Executive Summary

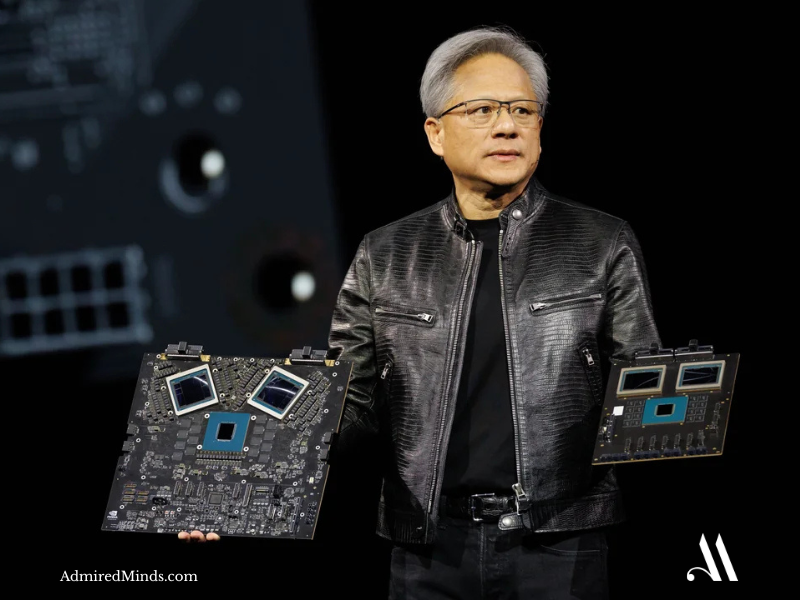

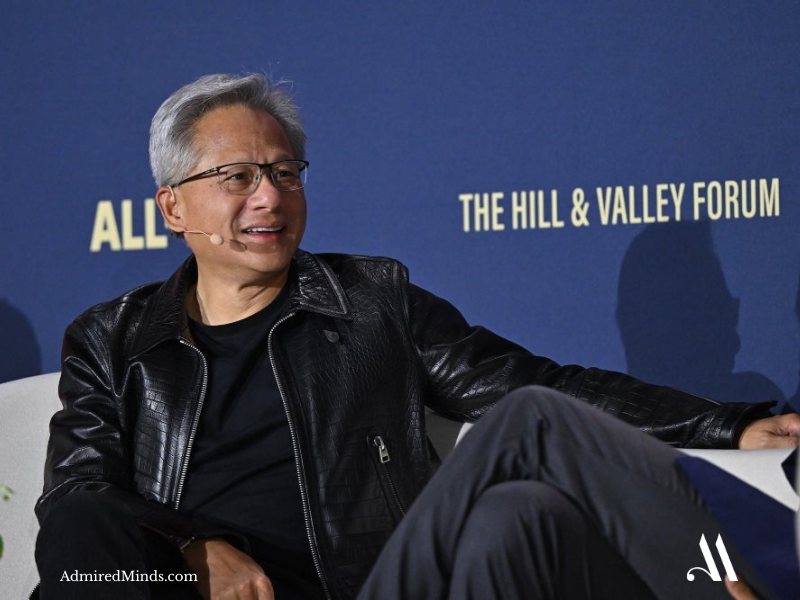

Beginning in 2012, NVIDIA CEO Jensen Huang repositioned the company from a graphics processing specialist into the leading supplier of artificial intelligence infrastructure. He bet the company’s future on AI acceleration despite uncertain market timing and massive R&D investment requirements. This case study examines how a visionary technology pivot made during early market development, backed by sustained innovation commitment, can create a trillion-dollar market leadership position.

“Bold decisions made before the world understands them often build the foundations of future empires.”

Market Context and Financial Impact Assessment

Pre-Transformation Market Position

- NVIDIA controlled 70%+ of the discrete graphics card market for gaming and professional workstations

- Annual revenue of $4.3 billion primarily from GPU sales to consumers and enterprises

- Emerging competition from AMD in graphics processing and Intel in integrated solutions

- Early AI research communities beginning to experiment with GPU computing for neural networks

AI Transformation Metrics and Investment Scope

- Strategic Pivot Timeline: 2012–2016 foundational AI infrastructure development

- R&D Investment: $20+ billion cumulative spending on AI-specific chip architectures

- Market Creation: Artificial intelligence training and inference acceleration industry

- Revenue Transformation: Gaming-centric to data center AI infrastructure focus

- Competitive Positioning: First-mover advantage in AI chip optimization and software ecosystem

“Vision is not predicting the future; it’s building a future others cannot yet see.”

Strategic Decision Framework Analysis

Critical Assessment Parameters

Huang’s transformation team identified three fundamental technology convergence opportunities:

- Computing Paradigm Shift: Parallel processing advantages for machine learning workloads over traditional CPUs

- AI Research Acceleration: Academic and industry demand for faster neural network training capabilities

- Data Center Evolution: Enterprise infrastructure requiring specialized AI processing for competitive advantage

Strategic Options Evaluation Matrix

| Option | Approach | Market Risk | Investment Requirements |
| --- | --- | --- | --- |
| Graphics Focus | Continue gaming and professional GPU optimization | Low | Moderate |
| Diversification Strategy | Balanced investment across multiple computing segments | Moderate | High resource dilution |
| AI-First Transformation | Complete pivot to artificial intelligence acceleration | High | Massive R&D commitment |
| Acquisition Strategy | Purchase AI startups and integrate technologies | Moderate | High capital requirements |

Implementation Strategy and Resource Allocation

Six-Pillar AI Infrastructure Framework

1. Specialized AI Chip Architecture Development and Manufacturing Excellence

- CUDA Platform: Parallel computing architecture enabling AI workload acceleration

- Tensor Cores: Dedicated AI processing units optimized for neural network mathematics

- Advanced Manufacturing: 7nm, 5nm, and 4nm chip fabrication partnerships with TSMC

2. Software Ecosystem Creation and Developer Platform Leadership

- CUDA SDK: Comprehensive development tools for AI researchers and engineers

- cuDNN Library: Deep neural network primitives optimizing training performance

- TensorRT: Inference optimization platform for production AI deployments

3. Data Center Infrastructure Strategy and Enterprise Partnerships

- DGX Systems: Complete AI training infrastructure for enterprise customers

- Cloud Integration: Partnerships with AWS, Azure, Google Cloud

- Networking Solutions: High-speed interconnects for distributed AI clusters

4. Academic Research Community Engagement and Talent Development

- University Programs: GPU donations and AI research partnerships

- Developer Conferences: GTC AI community leadership

- Research Collaborations: Partnerships with Stanford, MIT, and others

5. Vertical Market Expansion and AI Application Development

- Autonomous Vehicles: Self-driving car computing platforms

- Healthcare AI: Medical imaging and drug discovery acceleration

- Robotics: AI-powered robotics development tools

6. Manufacturing Scale and Supply Chain Optimization

- Fab Partnerships: Cutting-edge semiconductor production

- Component Integration: Memory, networking, and cooling

- Global Distribution: Worldwide data center supply chain

“Great technology companies are not built by chance; they are built by decades of disciplined reinvention.”

Enhanced AI Platform and Revenue Architecture

Multi-Tier AI Revenue Model Components

- Data Center AI: $47+ billion annual enterprise AI revenue

- Gaming Graphics: $10+ billion revenue

- Professional Visualization: Workstation GPU solutions

- Automotive AI: Self-driving vehicle computing platforms

Performance Metrics and Outcome Analysis

Short-Term Market Development (2012–2018)

- Data center revenue rising from $339M to $2.9B

- 80%+ market share in AI training accelerators

- CUDA becoming global AI research standard

- Market cap growing from $8B to $100B+

Long-Term Strategic Dominance (2019–2024)

- 95%+ share of AI training chip market

- Revenue explosion from $11.7B to $79B+

- Valuation exceeding $3 trillion

- Enabling LLM and generative AI revolution
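
To put the growth figures from the two periods above on a common footing, the short sketch below converts each start and end value into a growth multiple and a compound annual growth rate (CAGR). It is an illustration only: the function names are hypothetical, the "+" lower bounds are treated as exact values, and the spans of 6 years (2012–2018) and 5 years (2019–2024) are assumptions taken from the section headings rather than from reported fiscal-year data.

```python
def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start`."""
    if start <= 0:
        raise ValueError("start must be positive")
    return end / start


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if years <= 0:
        raise ValueError("years must be positive")
    return growth_multiple(start, end) ** (1 / years) - 1


# (label, start in $B, end in $B, assumed span in years)
# Values are copied from the bullet lists; the spans are assumptions.
FIGURES = [
    ("Data center revenue, 2012-2018", 0.339, 2.9, 6),
    ("Market cap, 2012-2018", 8.0, 100.0, 6),
    ("Total revenue, 2019-2024", 11.7, 79.0, 5),
]


def summarize(figures):
    """Return (label, multiple, cagr) for each figure."""
    return [
        (label, growth_multiple(start, end), cagr(start, end, years))
        for label, start, end, years in figures
    ]


if __name__ == "__main__":
    for label, multiple, rate in summarize(FIGURES):
        print(f"{label}: {multiple:.1f}x overall, {rate:.1%} per year")
```

Under these assumptions, each of the three series compounds at more than 40% per year. Because the quoted end values are lower bounds, the computed rates should be read as floors rather than precise figures.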

“AI did not accelerate on its own; NVIDIA built the engine that accelerated AI.”

Conclusion and Strategic Implications

Jensen Huang’s NVIDIA AI transformation demonstrates that visionary technology pivots during early market development can create trillion-dollar competitive advantages when supported by sustained innovation commitment and ecosystem development. The NVIDIA model proves that companies with specialized capabilities can anticipate and enable technology revolutions, transforming from component suppliers into platform leaders that define entire industries.
